TL;DR

Microsoft Copilot brings real productivity benefits but also opens the door to unintended data exposure. This blog breaks down AI deployment models and then focuses on one specific model, Software as a Service, as it relates to Microsoft Copilot. It explains how Retrieval Augmented Generation (RAG) functions inside Copilot, and how Microsoft Purview and Data Security Posture Management (DSPM) for AI help mitigate the associated risks by protecting the underlying data.

Organizations are moving quickly to adopt AI, from automating workflows with Microsoft Copilot to building custom agents in Azure AI Foundry. These tools improve team efficiency, reduce manual work, and unlock new value from existing data.

But with that power comes exposure. AI changes how data is accessed, aggregated, and interpreted. If you have not updated your security model to match, you are already behind.

This blog outlines how to secure AI, with a focus on Microsoft Copilot, Microsoft Purview, and the growing need for DSPM.

AI Deployment Models: Who Owns the Risk?

Most organizations are not using just one AI architecture. They are adopting a mix based on flexibility, control, and business need.

Software as a Service AI

Prebuilt tools like Microsoft Copilot or ChatGPT, managed entirely by the vendor. Fast to deploy, but with limited visibility and configuration options.

Platform as a Service AI

Cloud platforms like Azure AI Foundry or OpenAI APIs allow you to build your own applications using shared infrastructure. Security responsibilities are shared.

Self-Managed AI

Models deployed inside your own infrastructure. Maximum control over data, behavior, and risk. However, they also come with full responsibility for security and compliance.

The tradeoff is simple. More control means more work. As AI scales, that work gets more complex.

AI Categorization: Function Drives Risk

Deployment tells you where the AI lives. But you also need to classify what it does and what threats come with it. Here are four common functional types:

- Productivity AI: Assistants such as Microsoft Copilot that generate answers, summaries, and drafts from everyday business content. Risk: Oversharing and unintended exposure of sensitive data.

- Development AI: Assistants for writing or reviewing code. Risk: Intellectual property loss, injection flaws, insecure outputs.

- Custom AI Applications: Built for a specific business case. Risk: Shadow AI, misconfigured roles, API abuse.

- Autonomous AI Agents: Make decisions or take action based on logic. Risk: Privilege misuse, operational errors, compliance violations.

This post focuses on Productivity AI, where Microsoft Copilot is a prime example of high impact and high exposure risk.

What Makes Microsoft Copilot Powerful and Risky?

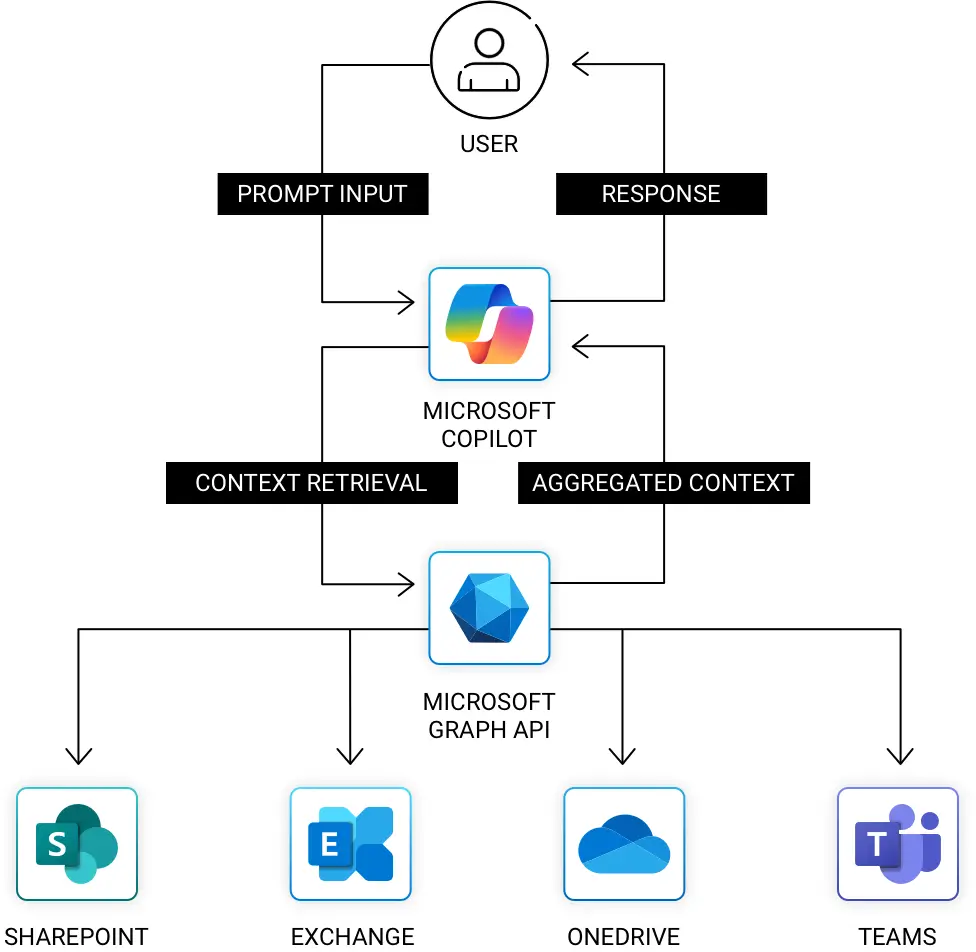

Copilot connects to SharePoint, OneDrive, Exchange, and Teams. It uses content a user can access to generate answers, summaries, and recommendations.

That ability depends on something called Retrieval Augmented Generation (RAG).

What is RAG?

- Retrieval – Copilot looks across Microsoft 365 for relevant content based on the user’s prompt.

- Generation – It passes the retrieved documents to the AI model, which generates a response using that context.

RAG lets AI answer questions using your real content. For example, Copilot might pull a file from OneDrive, a conversation from Teams, and a paragraph from SharePoint and then write a summary or generate a policy draft.

This is incredibly useful, but it also makes misuse and mistakes easy.

Since Copilot works with the user’s existing access rights, it can reach anything the user can. That includes old files, shared folders with loose permissions, and sensitive documents that were never labeled correctly.
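To make the retrieval step concrete, here is a minimal Python sketch of permission-trimmed RAG. It is not how Copilot is implemented: the `Document` class, the keyword scoring, and the `Everyone` principal are simplified, hypothetical stand-ins, and the model call itself is left out. What it does show is the property described above: retrieval filters on what the user can already read, so an overshared file lands in the grounding context as easily as the user's own work.

```python
from dataclasses import dataclass


# Hypothetical stand-in for Microsoft 365 content; not a Microsoft API.
@dataclass(frozen=True)
class Document:
    source: str          # where the content lives, e.g. "OneDrive", "Teams", "SharePoint"
    title: str
    text: str
    allowed: frozenset   # principals with read access, e.g. {"alice"} or {"Everyone"}


def can_read(user: str, groups: set, doc: Document) -> bool:
    """A user can read a document if they or any of their groups are granted access."""
    return bool(doc.allowed & ({user} | groups))


def retrieve(prompt: str, user: str, groups: set, corpus: list, k: int = 3) -> list:
    """Retrieval: keep readable documents only, then rank by naive keyword overlap."""
    terms = set(prompt.lower().split())
    scored = [
        (len(terms & set(doc.text.lower().split())), doc)
        for doc in corpus
        if can_read(user, groups, doc)
    ]
    # Stable sort: documents with equal scores keep their original order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked if score > 0][:k]


def build_grounded_prompt(prompt: str, docs: list) -> str:
    """Generation input: retrieved context is placed in the prompt sent to the model."""
    context = "\n".join(f"[{d.source}] {d.title}: {d.text}" for d in docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {prompt}"


corpus = [
    Document("OneDrive", "Q3 plan", "draft budget plan for q3", frozenset({"alice"})),
    Document("SharePoint", "Old salary sheet", "salary budget for every employee", frozenset({"Everyone"})),
    Document("Teams", "HR chat", "budget discussion with hr", frozenset({"hr-team"})),
]
hits = retrieve("summarize the budget", "alice", {"Everyone"}, corpus)
print([d.title for d in hits])  # ['Q3 plan', 'Old salary sheet']
print(build_grounded_prompt("summarize the budget", hits))
```

Alice never asked for salary data, but the old sheet is open to `Everyone` and mentions the budget, so it is pulled into her answer. Nothing was hacked; the permissions did exactly what they were set to do.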

The Real Risk: Unstructured Data and Overexposure

The danger is not that Copilot is too smart. The danger is that your environment is too permissive. Most organizations have years of unstructured data in SharePoint, OneDrive, and Exchange. Files are mislabeled. Folders are open to “Everyone.” Sensitive documents live in shared team spaces.

This is where Copilot becomes a problem. It aggregates context across all those systems and hands it to the user in seconds.

Security teams must move past static access reviews. The question is no longer “Can this user open this file?” It is “What can Copilot infer from everything this user can reach?”
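One way to start answering that question is to compute a user's effective reach: every item readable through direct grants, group membership, and nested groups. The sketch below assumes a simple, hypothetical directory model (a dictionary of direct memberships and a dictionary of per-file access lists); a real tenant would pull this data from Microsoft Entra ID and SharePoint permissions, which is not shown here.

```python
from collections import deque


def expand_groups(user: str, memberships: dict) -> set:
    """Breadth-first walk of group nesting; returns every group the user belongs to."""
    seen = set()
    queue = deque([user])
    while queue:
        principal = queue.popleft()
        for group in memberships.get(principal, set()):
            if group not in seen:  # also guards against membership cycles
                seen.add(group)
                queue.append(group)
    return seen


def effective_reach(user: str, memberships: dict, acls: dict) -> set:
    """Items readable by the user, and therefore available to an assistant acting as them."""
    principals = {user} | expand_groups(user, memberships)
    return {item for item, allowed in acls.items() if principals & allowed}


# Hypothetical directory and permissions
memberships = {"bob": {"sales", "Everyone"}, "sales": {"commercial"}}
acls = {
    "pipeline.xlsx": {"sales"},
    "pricing-strategy.docx": {"commercial"},  # reachable only through nested membership
    "board-minutes.docx": {"execs"},
    "legacy-hr-export.csv": {"Everyone"},     # overshared years ago
}
sensitive = {"pricing-strategy.docx", "board-minutes.docx", "legacy-hr-export.csv"}

reach = effective_reach("bob", memberships, acls)
print(sorted(reach & sensitive))  # ['legacy-hr-export.csv', 'pricing-strategy.docx']
```

A review of Bob's direct grants would miss the pricing strategy entirely; the nested `commercial` group is what exposes it.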

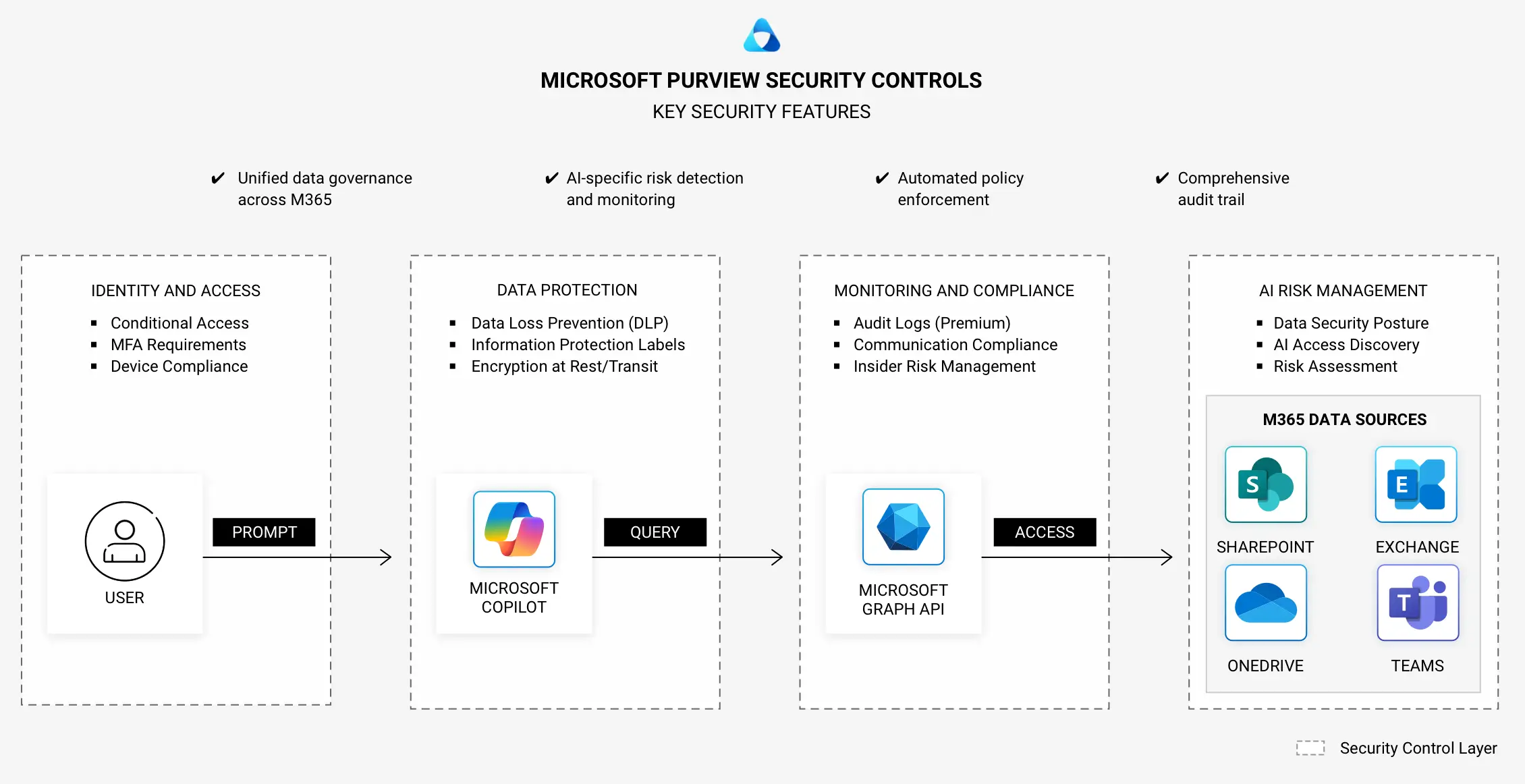

Microsoft Purview: Control at the Data Layer

Microsoft Purview gives organizations a way to govern their data from the inside out. It brings security, compliance, and policy enforcement into the actual content layer before Copilot ever retrieves it.

Automated Discovery and Classification

Purview scans Microsoft 365 environments to identify sensitive content such as personal data, financial records, and regulated files. It ships with built-in detection patterns and supports custom ones tailored to your business.
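Pattern-based detection usually pairs a regular expression with a validator to cut false positives. The Python sketch below illustrates that idea with a Luhn checksum for card numbers and a custom pattern for a hypothetical `EMP-` employee ID format. These detectors are illustrative assumptions, not Purview's built-in sensitive information type definitions, which also weigh supporting evidence such as nearby keywords and confidence levels.

```python
import re


def luhn_valid(number: str) -> bool:
    """Luhn checksum over the digits in the string; rejects most random digit runs."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


# Hypothetical detectors: (pattern, optional validator). Not Purview's definitions.
DETECTORS = {
    "Credit card number": (re.compile(r"\b(?:\d[ -]?){13,16}\b"), luhn_valid),
    "U.S. SSN": (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), None),
    # Custom, business-specific pattern (hypothetical employee ID format)
    "Employee ID": (re.compile(r"\bEMP-\d{6}\b"), None),
}


def classify(text: str) -> dict:
    """Return {detector name: number of validated matches} for detectors that fired."""
    findings = {}
    for name, (pattern, validator) in DETECTORS.items():
        hits = [
            m.group()
            for m in pattern.finditer(text)
            if validator is None or validator(m.group())
        ]
        if hits:
            findings[name] = len(hits)
    return findings


print(classify("Card 4111 1111 1111 1111, SSN 123-45-6789, owner EMP-004217."))
print(classify("Card 4111 1111 1111 1112"))  # {} because the checksum fails
```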

Sensitivity Labels and Enforcement Policies

Labels apply encryption, access restrictions, and usage rules. These policies stop unapproved users from viewing or sharing content. They also apply inside Copilot.

Copilot-Aware Content Filtering

Even if a user has access, Purview can block Copilot from retrieving that data during a prompt. Labels act as a real-time checkpoint between the model and sensitive content.
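The checkpoint idea can be sketched as a second filter applied after access control. In this minimal Python example, the `Item` class and the `BLOCKED_FOR_AI` set are hypothetical; in Purview this kind of behavior is configured through sensitivity label and DLP policy settings, which are not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Item:
    name: str
    label: Optional[str]  # applied sensitivity label, or None if the item was never labeled
    readers: frozenset    # users allowed to open the item


# Hypothetical policy: content with these labels is never used to ground an AI answer.
BLOCKED_FOR_AI = {"Highly Confidential", "Confidential - Legal"}


def grounding_candidates(user: str, items: list) -> list:
    """Access check first, then the label checkpoint: AI sees a subset of what the user can open."""
    readable = [item for item in items if user in item.readers]
    return [item.name for item in readable if item.label not in BLOCKED_FOR_AI]


items = [
    Item("roadmap.pptx", "General", frozenset({"dana"})),
    Item("merger-memo.docx", "Highly Confidential", frozenset({"dana"})),
    Item("old-notes.txt", None, frozenset({"dana"})),  # unlabeled, so nothing stops it
]
print(grounding_candidates("dana", items))  # ['roadmap.pptx', 'old-notes.txt']
```

Dana can still open the merger memo herself; the label only keeps it out of AI-generated answers. The unlabeled notes file passes straight through, which is why labeling coverage matters as much as the policy itself.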

Data Loss Prevention Across Microsoft 365

Purview enforces DLP in Outlook, Teams, SharePoint, and OneDrive. If a user or AI tool tries to move, send, or expose sensitive information, policies trigger alerts or blocks.
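DLP rules generally combine a condition (what sensitive content, how much of it, and where it is going) with an action. The sketch below evaluates a single send or share event against a small rule set and returns the strongest action that applies. The `Rule` fields, rule names, and thresholds are hypothetical simplifications, not Purview's policy schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    info_type: str       # sensitive information type the rule looks for
    min_count: int       # number of matches needed to trigger
    external_only: bool  # fire only when content leaves the organization
    action: str          # "alert" or "block"


SEVERITY = {"allow": 0, "alert": 1, "block": 2}


def evaluate(findings: dict, is_external: bool, rules: list) -> tuple:
    """Return (strongest action, names of matched rules) for one send or share event."""
    matched = [
        r for r in rules
        if findings.get(r.info_type, 0) >= r.min_count
        and (is_external or not r.external_only)
    ]
    action = max((r.action for r in matched), key=SEVERITY.__getitem__, default="allow")
    return action, [r.name for r in matched]


# Hypothetical rule set
RULES = [
    Rule("Card numbers sent externally", "Credit card number", 1, True, "block"),
    Rule("Bulk SSNs", "U.S. SSN", 5, False, "alert"),
]

print(evaluate({"Credit card number": 1}, True, RULES))   # ('block', ['Card numbers sent externally'])
print(evaluate({"Credit card number": 1}, False, RULES))  # ('allow', [])
print(evaluate({"U.S. SSN": 12}, False, RULES))           # ('alert', ['Bulk SSNs'])
```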

Detailed Logging for Audit and Compliance

Activity is recorded in detail. Access events, label changes, and policy violations are all visible for investigations, reporting, and continuous improvement.
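Logs pay off only when you query them. As a rough illustration, the Python sketch below works over a hypothetical, simplified event list covering access, label changes, and policy violations, and answers two common investigation questions: who triggers the most policy violations, and who has downgraded a label. The event fields and label ordering are assumptions, not the Purview audit log schema.

```python
from collections import Counter

# Hypothetical, simplified audit events; not the Purview audit schema.
EVENTS = [
    {"user": "alice", "kind": "access", "item": "q3-plan.docx"},
    {"user": "alice", "kind": "policy_violation", "item": "cards.xlsx"},
    {"user": "bob", "kind": "label_change", "item": "merger-memo.docx",
     "old": "Highly Confidential", "new": "General"},
    {"user": "bob", "kind": "policy_violation", "item": "ssn-export.csv"},
    {"user": "bob", "kind": "policy_violation", "item": "cards.xlsx"},
]

# Hypothetical label order, from least to most sensitive
LABELS = ["General", "Confidential", "Highly Confidential"]


def violations_by_user(events: list) -> Counter:
    """Count policy violations per user, a typical starting point for an investigation."""
    return Counter(e["user"] for e in events if e["kind"] == "policy_violation")


def downgrades(events: list, ranking: list) -> list:
    """Label changes that lowered sensitivity according to the given ranking."""
    return [
        e for e in events
        if e["kind"] == "label_change"
        and ranking.index(e["new"]) < ranking.index(e["old"])
    ]


print(violations_by_user(EVENTS).most_common(1))          # [('bob', 2)]
print([e["item"] for e in downgrades(EVENTS, LABELS)])    # ['merger-memo.docx']
```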

DSPM for AI: Monitoring the Full Picture

Microsoft Purview also plays a critical role in Data Security Posture Management (DSPM). DSPM is a discipline focused on visibility, classification, and access analysis for unstructured data.

DSPM gives you a full map of where sensitive data exists, who can reach it, and how it moves. With AI in play, that map becomes essential.

DSPM for AI helps you answer questions like:

- What sensitive files can Copilot reach today?

- Where are labeling gaps leaving exposure risk?

- Which users have overbroad access that AI tools could exploit?
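Each of those questions maps to a simple query over an inventory of files, their labels, their classification results, and who can read them. The sketch below assumes such an inventory already exists; the `FileRecord` fields, the sample paths, and the `max_sensitive` threshold are hypothetical. Building that inventory from a real tenant is the part DSPM tooling takes on.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FileRecord:
    path: str
    label: Optional[str]  # applied sensitivity label, None if unlabeled
    detected: frozenset   # sensitive information types found by classification
    readers: frozenset    # users who can read the file, after group expansion


def reachable_sensitive(user: str, files: list) -> list:
    """What sensitive files can an assistant acting for this user reach today?"""
    return [f.path for f in files if f.detected and user in f.readers]


def labeling_gaps(files: list) -> list:
    """Where does detected sensitive content have no label protecting it?"""
    return [f.path for f in files if f.detected and f.label is None]


def overbroad_users(files: list, max_sensitive: int) -> list:
    """Which users can read more sensitive files than the (hypothetical) threshold allows?"""
    counts = Counter(user for f in files if f.detected for user in f.readers)
    return sorted(user for user, n in counts.items() if n > max_sensitive)


FILES = [
    FileRecord("/hr/salaries.xlsx", None, frozenset({"Personal data"}), frozenset({"alice", "bob", "carol"})),
    FileRecord("/finance/cards.csv", "Highly Confidential", frozenset({"Credit card number"}), frozenset({"bob"})),
    FileRecord("/legal/contract.docx", "Confidential", frozenset({"Employee ID"}), frozenset({"bob", "carol"})),
    FileRecord("/team/lunch-menu.docx", None, frozenset(), frozenset({"alice", "bob", "carol"})),
]

print(reachable_sensitive("carol", FILES))  # ['/hr/salaries.xlsx', '/legal/contract.docx']
print(labeling_gaps(FILES))                 # ['/hr/salaries.xlsx']
print(overbroad_users(FILES, 2))            # ['bob']
```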

Purview brings DSPM and AI security together, giving you the context and control needed to keep Copilot productive without making it dangerous.

Final Word: Secure the Data First

Copilot is not a threat. It is an amplifier.

If your data is well governed, Copilot makes your teams faster. If your data is chaotic and overexposed, Copilot makes your risk larger.

Microsoft Purview and DSPM for AI give security teams a path to protect content across the Microsoft 365 ecosystem. This enables AI to add value while lowering the risk of data exposure or security incidents.

Armor helps organizations implement AI security from the inside out. If you are deploying Copilot or building your AI strategy, we can help you secure it before AI exposes something it shouldn’t.

About Armor

Armor is a global leader in cloud-native managed detection and response. Trusted by over 1,700 organizations across 40 countries, Armor delivers cybersecurity, compliance consulting, and 24/7 managed defense built for transparency, speed, and results. By combining human expertise with AI-driven precision, Armor safeguards critical environments to outpace evolving threats and build lasting resilience. For more information, visit our website, follow us on LinkedIn, or request a free Cyber Resilience assessment.