Ever since it gained widespread adoption, cloud computing has been synonymous with virtualization. But what we’ve all become accustomed to is only one form of virtualization: virtual machines running on top of a traditional hypervisor. Lately, however, there’s been a surge in alternative virtualization concepts, and one in particular is attracting massive interest in both public cloud and on-premises communities. This concept, which in many cases has proven to be more portable, efficient and secure than traditional virtual machines, is appropriately known as containerization.

In fact, container-based virtualization is almost as old as its hypervisor-based counterpart. However, it didn’t gain mainstream enterprise adoption until recently, when Docker introduced developers to an easier way of packaging applications. But while some startups and large enterprises have started taking advantage of containerization, many people (even technical folks) still don’t know what it is. Let me break it down for you.

Containers vs. Virtual Machines

Among other things, containerization was meant to address common issues caused by developers’ throw-it-over-the-wall practice of handing software off to IT operations teams. Oftentimes, this caused problems when the supporting software in the production environment (runtimes, libraries, even the operating system) differed from that in the development environment.

For instance, development and testing might have been done on Java 7 while the production environment used Java 9. Or tests may have been conducted on CentOS while production ran on Red Hat. Differences like these can cause dependency conflicts and other incompatibilities, making life difficult for both IT and development teams when deploying new software and applications.

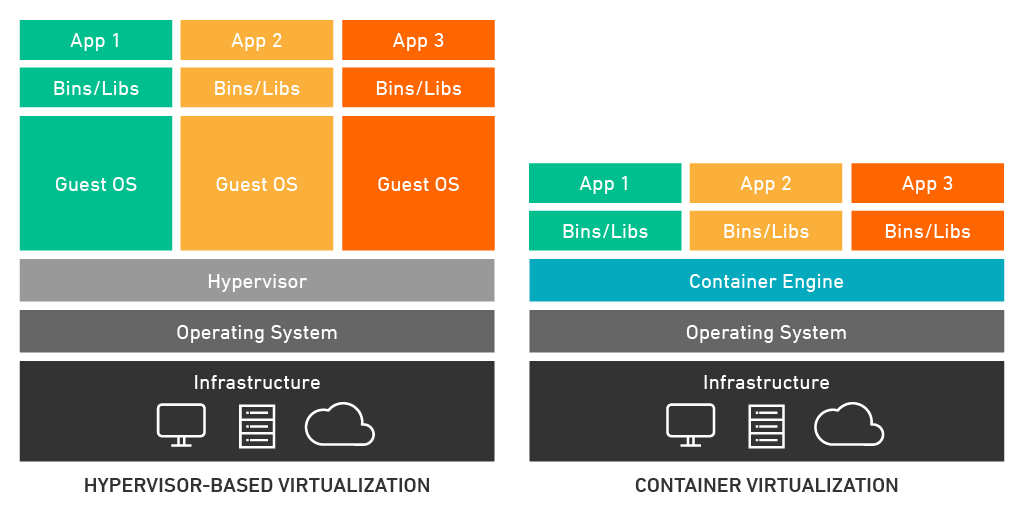

Traditional hypervisor-based virtualization techniques, commonly employed in on-premises and cloud IT infrastructures, already address these problems, right? That’s what I thought when containers began to gain steam. After all, a virtual machine does contain all the elements (the application itself, its runtime environment, the operating system, etc.) that an application needs to run in both development and production environments. IT folks only need to make sure they have the right hypervisor to support the VM.

While both virtual machines and containers enable application portability, containers are significantly lighter and thus more portable. The main reason is that, unlike VMs, containers don’t carry a full operating system with its own kernel, the part of the OS that is loaded first at boot and takes charge of memory, disk, process and task management. In containerization, a single kernel resides in the host OS and is shared among all the containers. All that’s housed in a container is the application code and its dependencies.
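If you have a Linux machine with Docker handy, you can see the shared kernel for yourself. The short Python sketch below simply asks the kernel for its release string through the standard `platform` module; `kernel_release` is a hypothetical helper name. Assuming a Linux host and an image that includes Python, running it on the host and then inside a container on that host should print the same release, because the container has no kernel of its own.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on.

    Inside a Linux container this is the host's kernel, because the
    container shares it rather than booting one of its own.
    """
    return platform.release()


if __name__ == "__main__":
    print("Kernel release:", kernel_release())
```

A full VM on the same host, by contrast, would report whatever kernel its guest OS booted.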

The absence of a kernel benefits containers in several ways. First, container images can be as small as 10 megabytes, whereas virtual machine images can easily exceed 10 gigabytes (a Windows Server 2016 VM, for instance, could be about 32 GB).

Second, the memory requirements of containers are significantly lower than those of virtual machines. That’s not only because a VM needs more memory to begin with, since it must accommodate its own kernel, but also because of how early in its lifecycle it claims that memory: a virtual machine grabs the memory allocated to it at boot, whether or not it needs it yet.

And third, no kernel means faster startup. A typical virtual machine can take a few minutes to boot because it has to start its own operating system, while some containers start in less than a second.

More Key Characteristics

Containers and runtime

Earlier, we mentioned that containers typically include the application and its dependencies. These ‘dependencies’ refer to the application’s libraries and binaries, as well as its runtime environment, or simply runtime. Including the runtime in the container is the key reason containers can be so portable.

Each software application is written in a specific programming language (e.g. Java, C++, C#). When developers want to issue certain instructions to the underlying hardware (through the kernel), they write them in their programming language of choice. So even when two developers want to issue the same instructions to the same piece of hardware, they will write those instructions differently if they use different languages.

A Java developer would write the instruction in Java, a C++ developer in C++, a C# developer in C#, and so on. So how does the underlying kernel, and in turn the hardware, interpret those instructions? Well, just like humans with no common language trying to communicate with one another, they need a translator or interpreter.

In computers, it’s the language’s runtime (working with its compiler or interpreter) that takes on this task. Because containers include both the application and its runtime, they can run regardless of which Linux distribution or version the host uses, as long as the host kernel and CPU architecture are compatible. For example, the same container can run on either a new or old version of CentOS, as well as on either Ubuntu or Red Hat.
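The translation step is easiest to see in an interpreted language. Python isn’t one of the languages named above, but it makes a handy illustration: CPython compiles each function’s source into bytecode instructions that its interpreter then executes, and the standard `dis` module lists them. In this sketch, `add_tax` and `bytecode_ops` are hypothetical names chosen for the example.

```python
import dis
from typing import Callable, List


def add_tax(price: float, rate: float) -> float:
    # A hypothetical one-line function standing in for "application code".
    return price * (1 + rate)


def bytecode_ops(func: Callable) -> List[str]:
    """List the bytecode instruction names CPython compiled func into."""
    return [instr.opname for instr in dis.get_instructions(func)]


if __name__ == "__main__":
    # The exact instruction names vary between Python versions, so the
    # translation depends on which interpreter the app ships with.
    print(bytecode_ops(add_tax))
```

Because the bytecode instruction set changes between Python releases, shipping the interpreter inside the image is one way to make sure the code is translated the same way everywhere the container runs.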

Inherent security through immutability

Another important characteristic of containers is that they’re meant to be immutable: rather than changing a running container, you replace it. If you need to make some changes to your application code, you take the existing container out of production, implement the changes, build them into a new version of the container image, and then run that new version.

This characteristic alone has huge implications from a security standpoint. It means that it is far harder for an attacker to tamper with a running container. And in the worst-case scenario, if an attacker does succeed in modifying a running container, you’ll be able to detect it, because it will no longer behave the way it’s supposed to.
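Because a container is supposed to stay identical to the image it was started from, any change to its files is itself a warning sign. The Python sketch below illustrates that idea with a hypothetical `snapshot`/`drift` pair: record a SHA-256 digest of every file in an application directory when the image is built, then compare against a fresh snapshot later. This is not how Docker itself works; Docker’s `docker diff` command is the built-in way to list files changed in a container’s filesystem relative to its image.

```python
import hashlib
import os
from typing import Dict, List


def snapshot(root: str) -> Dict[str, str]:
    """Map each file path under root (relative to root) to the SHA-256 of its contents."""
    digests: Dict[str, str] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as f:
                digests[os.path.relpath(path, root)] = hashlib.sha256(f.read()).hexdigest()
    return digests


def drift(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files added, removed, or modified since the baseline snapshot."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            p for p in baseline.keys() & current.keys() if baseline[p] != current[p]
        ),
    }


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as app_dir:
        app_file = os.path.join(app_dir, "app.py")
        with open(app_file, "w") as f:
            f.write("print('hello')\n")
        baseline = snapshot(app_dir)  # taken when the image is built

        with open(app_file, "a") as f:
            f.write("import os  # simulated tampering\n")
        print(drift(baseline, snapshot(app_dir)))
```

An empty report means the files still match what was shipped; anything else means the container has drifted from its image and deserves a closer look.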

Business Benefits of Containers

Containers are a godsend to several groups within the enterprise. First, they eliminate certain problems associated with software deployment, making life easier for IT operations and development teams and enabling a more seamless DevOps culture. The hours these teams save can then go to more critical, business-impacting tasks. In addition, easier software deployment boosts productivity and shortens time to market.

More importantly for CFOs, board members and other stakeholders, containers bring down costs through increased density: thanks to their smaller storage and memory footprints, you can run more containers than virtual machines on the same hardware. Increased density, in turn, translates to better scalability.
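To make the density argument concrete, here is a back-of-the-envelope calculation in Python. Every figure in it (host memory, VM footprint, container footprint) is hypothetical, chosen only to illustrate the arithmetic, and it treats memory as the only constraint with no overcommit.

```python
def instances_per_host(host_memory_mb: int, per_instance_mb: int) -> int:
    """Instances that fit when memory is the only constraint and nothing is overcommitted."""
    return host_memory_mb // per_instance_mb


# All figures below are hypothetical, chosen only to illustrate the arithmetic.
HOST_MEMORY_MB = 64 * 1024        # a 64 GB server
VM_FOOTPRINT_MB = 4 * 1024        # one VM: guest OS, kernel, runtime and app
CONTAINER_FOOTPRINT_MB = 512      # one container: app and its dependencies only

if __name__ == "__main__":
    vms = instances_per_host(HOST_MEMORY_MB, VM_FOOTPRINT_MB)
    containers = instances_per_host(HOST_MEMORY_MB, CONTAINER_FOOTPRINT_MB)
    print(f"VMs per host: {vms}, containers per host: {containers}")  # 16 vs 128
```

Real numbers depend on the workloads, CPU limits and the host’s own overhead; the point is only that a smaller per-instance footprint divides into the same hardware more times.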

Some technologies are simply ahead of their time. While most of them fall by the wayside and eventually disappear into oblivion, a few survive long enough to become relevant and even gain prominence. Containers are among those few. And as it stands, containerization is the future of cloud computing.

In a future post, I’ll address the security aspects of containers and how they relate to cloud environments.

Stay tuned, and as always… Fight the good fight.